Recent Posts

Setting up AirPrint for Samsung ML-2165W

A practical guide to reviving a Samsung ML‑2165W by connecting it to a Raspberry Pi, configuring CUPS, and enabling AirPrint support for seamless printing from Windows, Linux, and iOS devices.

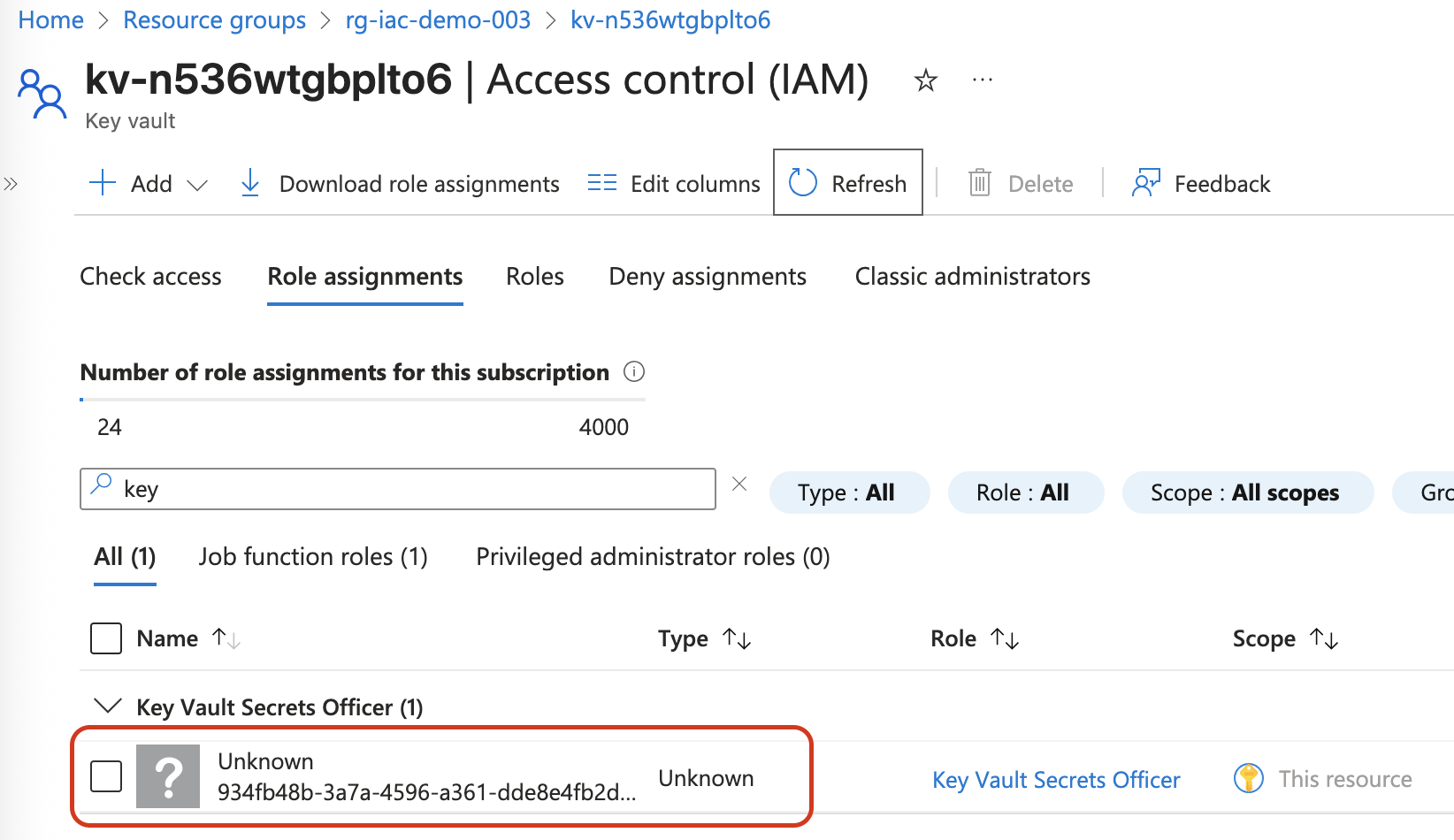

Cleaning Up Azure Role Assignments with Deployment Stacks

Classic Azure deployments often leave behind orphaned role assignments, creating clutter and confusion. In this post, I explore how Deployment Stacks—tested via GitHub Actions—offer a cleaner, more predictable way to manage and remove role assignments at the subscription level. Includes code, setup insights, and lessons learned.

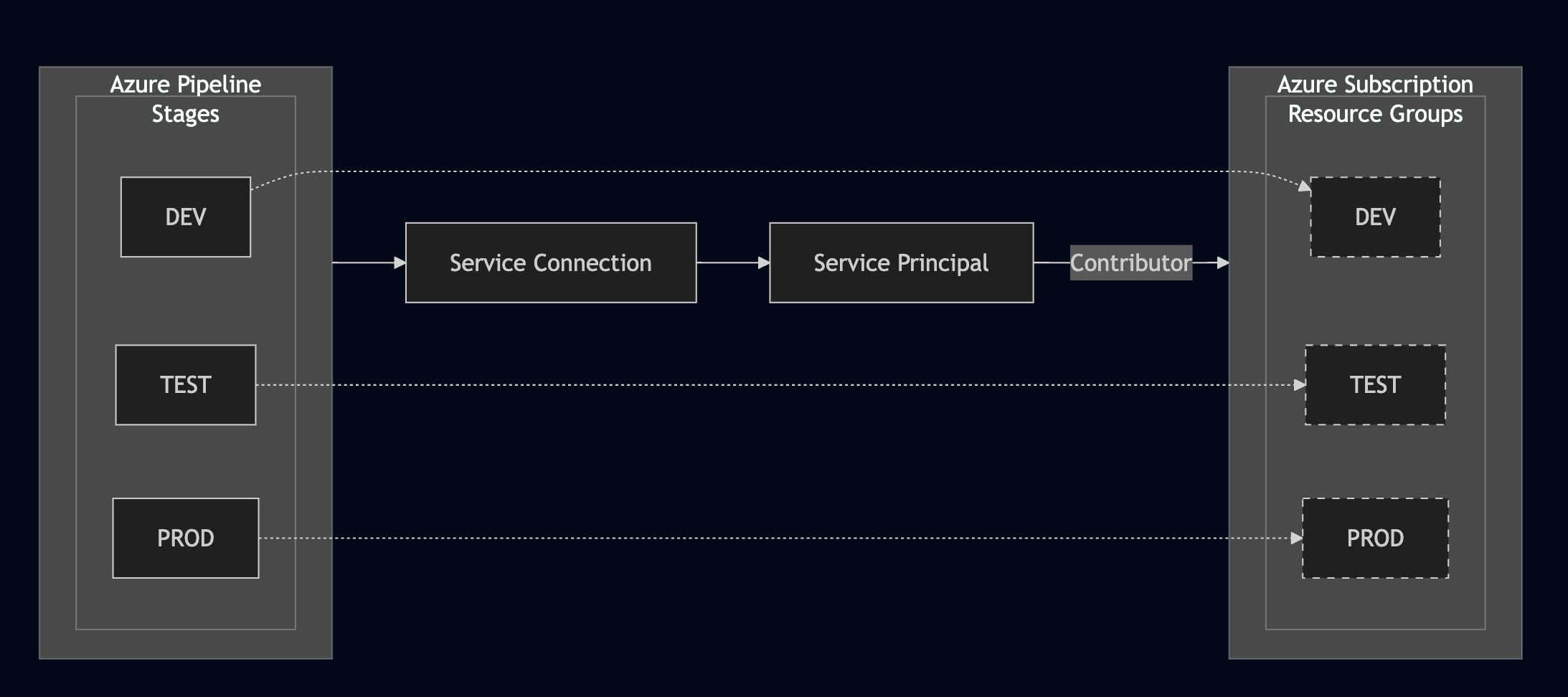

🚀 Get Started With Infrastructure-as-Code (IaC)

This guide walks you through setting up IaC with Azure DevOps using Bicep and Deployment Stacks. Even a minimal setup can make a big difference.

Printing a 3D bun from a photo

Can AI really help you 3D print a bun from a photo? I decided to find out.

Python for Automation in Azure DevOps

A practical guide to automating Azure DevOps Work Item updates using Python and Azure Pipelines. This post explores hosting options, AI-assisted coding, and the trade-offs of different authentication methods. It highlights a lightweight, maintainable solution for personal productivity and reflects on the evolving role of developers in an AI-driven workflow.
