Below you will find pages that utilize the taxonomy term “Azure”

Cleaning Up Azure Role Assignments with Deployment Stacks

Python for Automation in Azure DevOps

Getting Tokens with Azure CLI

Scoping an AppReg to Guest Only User Administration

Discord Bot From Hello World to Container Apps

Recently I became curious about Discord bots and how they work.

In this blog post, I am going to write about what I learned. While some parts might be familiar to you, I found it very amusing to start with a simple “Hello, World!” bot, run it locally, deploy it to the cloud, and integrate it with ChatGPT. Along the way, I learned a lot. I hope this post can inspire other people, or at least serve as a reference to refresh my memory when I revisit this topic in the future.

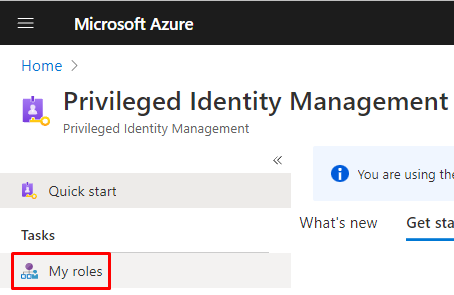

A PowerShell script for activating an eligible role assignment in Azure AD

Recently my role assignments in Azure AD were switched from permanent to eligible ones. This is part of PIM (Privileged Identity Management); you can read more about it on MS Docs:

To activate your eligible assignment you can use the Azure Portal, Graph API, or PowerShell. The activation in the portal and Graph API is described on MS Docs:

Sites.Selected and Governance

The new permission in Graph API, Sites.Selected, is a step in the right direction. For a long time we have been looking for ways to scope access so that it lives up to the principle of least privilege. Until now it was either nothing or everything. I have tried out the new Sites.Selected permission and here are my findings.

First of all, if you haven’t heard about Sites.Selected, please visit these pages to find out more. I am skipping the introduction, since there are already good resources on that out there.

A cost-effective way of running legacy scripts in the cloud

Do you also have some huge old scripts that run locally on a server? Have you also considered moving them to the cloud? Here is an idea for how to do it quickly and easily.

In my case I have some older PowerShell scripts that are harder to convert to serverless applications:

- They use the MSOnline module in PowerShell, so they would have to be rewritten for AzureAD before they could run in an Azure Function.

- They take around 15 minutes to complete, while the Azure Functions Consumption Plan is limited to 10 minutes. Of course I could split them into several parts, but I am looking for an easy way right now. I have to postpone refactoring because I am not sure whether there is a real need for this script solution.

- They process a lot of data and consume more than 400 MB of memory, which makes them crash when I put them in an Azure Automation Runbook.

Well, maybe a Windows Server VM in Azure is the only way? While recently setting up a Minecraft server, I followed a blog post that proposes auto-shutdown and Logic Apps to start the server. That gave me the idea to use exactly the same approach to make my scripts as cost-effective as possible.

Flashing Trådfri lights on Azure Alerts

What if you put together Work From Home and Home Automation? Well, removing the common denominator (HOME) would mean Work Automation (sic!). I want to tell you about a tiny hobby project I have had at home that is still related to my work: whenever an Azure alert is triggered, my Trådfri smart light from IKEA flashes for a couple of seconds.

Summary (if you want to skip the long story below): The solution is a tiny web application. Its publicly accessible URL, exposed using ngrok, is registered as a webhook in an Azure Alert. It’s on GitHub, you’re welcome to use it as you please 😎:
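To make the webhook idea concrete, here is a minimal Python sketch of the decision such a web application has to make when Azure calls it. It is not the code from the repository. It assumes the alert's action group sends the Azure Monitor common alert schema, where the state sits in `data.essentials.monitorCondition`; the function name `should_flash` is made up for this illustration.

```python
import json


def should_flash(body: bytes) -> bool:
    """Return True when the webhook body describes a fired alert.

    Assumption: the payload follows the Azure Monitor common alert
    schema, i.e. {"data": {"essentials": {"monitorCondition": ...}}}.
    """
    try:
        payload = json.loads(body)
    except ValueError:
        # Not valid JSON (or not valid UTF-8): ignore the request.
        return False

    if not isinstance(payload, dict):
        return False
    data = payload.get("data")
    if not isinstance(data, dict):
        return False
    essentials = data.get("essentials")
    if not isinstance(essentials, dict):
        return False

    # "Fired" means the alert was triggered; "Resolved" means it cleared.
    return essentials.get("monitorCondition") == "Fired"


# Hypothetical sample payload, trimmed to the fields used above.
sample = json.dumps({
    "schemaId": "azureMonitorCommonAlertSchema",
    "data": {"essentials": {"alertRule": "demo-rule",
                            "monitorCondition": "Fired"}},
}).encode("utf-8")
```

The web application would call a function like this from its POST handler and only talk to the light when it returns `True`, so that "Resolved" notifications and malformed requests do not make the lamp flash.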

Setting up a HelloWorld Azure Alert

Azure Alerts are awesome for monitoring solutions in Azure. If you are about to set up your first Alert Rules in Azure, then this guide is for you. Configuring alert rules can be quite intimidating at first, with all the options, metrics, evaluation times, etc.

Here is a very simple setup that can serve as a teaser and help you get started with Azure Alerts.

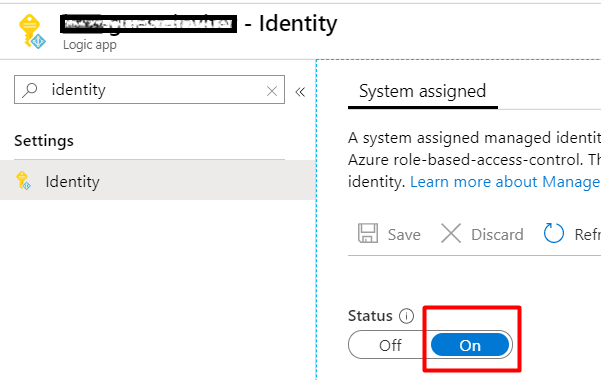

Using secrets in Logic Apps in a secure way

This is a guide to handling secrets in a Logic App securely. It combines three resources:

- Accessing Key Vault from Logic App with Managed Identity

- Get Secrets Key Vault API

- Hide your logic apps secrets from prying eyes

First, enable a Managed Identity for your Logic App:

In the Key Vault, add a new Access Policy for the new Managed Identity (from the previous step). Use the least privileges possible. In my case, GET for secrets is enough.
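As a rough illustration of what the Logic App's HTTP action then has to send, the Python sketch below builds the request for the Key Vault Get Secret API. The vault and secret names are placeholders, the helper name is hypothetical, and the `api-version` value `7.4` is an assumption; check it against the API reference you use.

```python
from urllib.parse import quote


def build_get_secret_request(vault_name: str, secret_name: str,
                             api_version: str = "7.4") -> dict:
    """Describe the HTTP call that reads one secret from Key Vault.

    The returned dict mirrors the fields of a Logic App HTTP action:
    method, uri, and managed identity authentication.
    """
    uri = (
        f"https://{vault_name}.vault.azure.net"
        f"/secrets/{quote(secret_name, safe='')}"
        f"?api-version={api_version}"
    )
    return {
        "method": "GET",
        "uri": uri,
        "authentication": {
            # The Managed Identity requests a token for Key Vault.
            "type": "ManagedServiceIdentity",
            "audience": "https://vault.azure.net",
        },
    }


# Placeholder names, for illustration only.
request = build_get_secret_request("my-vault", "my-secret")
```

Because the identity only has GET on secrets, this single call is all it can do against the vault, which is the point of the least-privilege setup.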

Filtering Azure Table Data directly in the Azure Function Binding

Instead of filtering values from an Azure Storage Table in your function code, you can do it directly in the bindings. It might not be a solution for everything, but in the right place, it is fantastic. I was very surprised to see how little code was needed after this binding change:

For that to work, define the filter attribute in the bindings: "filter": "(PartitionKey eq '{package}')"
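To show what the `{package}` placeholder amounts to, here is a small Python sketch that emulates the substitution. It is not the Functions runtime's code; the helper name `resolve_filter` is hypothetical, and doubling single quotes is my assumption, based on the OData convention for string literals.

```python
import re

# The filter template from the binding definition.
FILTER_TEMPLATE = "(PartitionKey eq '{package}')"


def resolve_filter(template: str, values: dict) -> str:
    """Replace each {name} placeholder with the matching value."""

    def substitute(match: re.Match) -> str:
        value = str(values[match.group(1)])
        # OData string literals escape a single quote by doubling it.
        return value.replace("'", "''")

    return re.sub(r"\{(\w+)\}", substitute, template)


# A request for the package "spfx" would query only that partition.
resolved = resolve_filter(FILTER_TEMPLATE, {"package": "spfx"})
```

With the filter resolved per request, the table service returns only the rows from the matching partition, so the function body no longer needs its own filtering loop.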

To try it out, add a new row in a table defined in the bindings (“metadata” in my case):

Trust gulp-connect certificate from Visual Studio Online on Mac OS

I have read and followed this awesome post:

Getting SPFx working in Visual Studio Online by SPDavid.

I got my hands dirty and tried that guide out. It worked well, though I spent some time googling (binging) around to get rid of the SSL warnings for the remote “localhost” on my Mac.

I would like to share this simple instruction on how to trust a self-signed certificate from gulp-connect on Mac OS. The complication is that the certificate lives on the remote Linux machine (in the Visual Studio Online environment) that you are connected to through the Visual Studio Code extension.

Tips and Tricks for Azure Functions

These are my favourite tips and tricks. I have only included those that my colleagues and I have tried out.

Architecture tips

Keep it slim

Functions should do one thing and they should do it well. When you develop in C# and Visual Studio, it is so tempting to build a “microservice” the proper way: you add interfaces, implement good patterns, and all of a sudden you have a monolith packaged as a microservice. If your function grows, stop and rethink. It is better to see how input and output bindings can be used. Orchestration with Logic Apps or Durable Functions can also help.

Simple Build for dotnet new react

I created a sample ASP.NET Core app with React.

dotnet new react

Then it took a couple of hours to get the build to work. Here is my working azure-pipelines.yml:

Resources:

S01E01 IoT: Posting Temperature from Raspberry Pi to Azure

Recently I have been looking more at IoT and Raspberry Pi in my spare time, and I want to share my experience in a series of posts. This post is about measuring temperature, humidity and pressure with a Raspberry Pi 2 Model B and a Sense HAT, and posting this data to Azure Table Storage. I followed this tutorial for connecting to Azure with Python and these instructions for reading data from the Sense HAT. The Python script is on GitHub. Along the way I learned that only Python 2.x could be used with Azure at the time, and that table names cannot contain underscores (I got a Bad Request error when I tried to create a table with the name “climate_data”). But overall, the process was straightforward. The temperature is not correct, maybe because the sensor sits between the Raspberry Pi and the Sense HAT, where it gets warm. But it is just a proof of concept. I used Visual Studio 2015 to see the data in Azure Table Storage. For that I needed to install Azure SDK 2.7. There are many other “explorers” for Azure Storage.

Other resources

Accessing Azure from Linux and Mac

Improvement #1 Corrected Temperature

I found a formula for calculating a more correct temperature on the Raspberry Pi forum.
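The Bad Request error for “climate_data” mentioned above can be caught before any call to Azure. The sketch below is not part of the original script; it is a small Python check based on the documented naming rules for Azure Table Storage (letters and digits only, not starting with a digit, 3 to 63 characters). The function name is hypothetical.

```python
import re

# Azure Table Storage table names: alphanumeric only, must not start
# with a digit, and must be between 3 and 63 characters long.
TABLE_NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z0-9]{2,62}")


def is_valid_table_name(name: str) -> bool:
    """Return True when the name satisfies the table naming rules."""
    return TABLE_NAME_PATTERN.fullmatch(name) is not None


# The name from the post fails because of the underscore.
rejected = is_valid_table_name("climate_data")
# Dropping the underscore gives a name the service accepts.
accepted = is_valid_table_name("climatedata")
```

Running a check like this before creating the table turns an opaque Bad Request from the service into an error you can explain locally.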
