A cost-effective way of running legacy scripts in the cloud

By Anatoly Mironov

Do you also have some huge old scripts running locally on a server? Have you considered moving them to the cloud? Here is an idea for doing it quickly and easily.

In my case, I have some older PowerShell scripts that are hard to convert to serverless applications:

- They use the MSOnline PowerShell module, so they would have to be rewritten for the AzureAD module before they could run in an Azure Function.

- They take around 15 minutes to complete, while the Azure Functions Consumption plan is limited to 10 minutes. Of course, I could split them into several parts, but right now I am looking for an easy way. I have to postpone refactoring because I am not sure there is a real need for this script solution.

- They process a lot of data and consume more than 400 MB of memory, which makes them crash when I put them in an Azure Automation runbook.

Well, maybe a Windows Server VM in Azure is the only way? Recently, while setting up a Minecraft server, I followed a blog post that proposes auto-shutdown and logic apps to start the server. That gave me the idea to use exactly the same approach here, to make it as cost-effective as possible.

My script solution needs 15 minutes to complete and runs every night. For the remaining 23 hours and 45 minutes of the day, the VM is not needed at all, so I can stop it. Here is what I’ve tried and got working:

1. A logic app starts once a day.

2. It turns on the Windows Server VM.

3. A PowerShell script runs as a scheduled job triggered at startup.

4. Once done, the PowerShell script makes an HTTP call to the Stop logic app.

5. The Stop logic app stops and deallocates the Windows Server VM.

Job at startup (3)

PowerShell has a native way to register jobs that run at startup. I just followed this digestible guide:

I created a new folder: C:\Scripts

I copied my legacy script to that folder (let’s call it ‘Legacy.ps1’ for the sake of simplicity) and then created a startup job by running these two lines:

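```powershell
# Fire at every boot, after a random delay of up to 30 seconds
$trigger = New-JobTrigger -AtStartup -RandomDelay 00:00:30
# Register Legacy.ps1 as a scheduled job named "Legacy" with that trigger
Register-ScheduledJob -Trigger $trigger -FilePath C:\Scripts\Legacy.ps1 -Name Legacy
```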
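Registering the job is a one-off, but while the script is still being adjusted it helps to be able to re-register it and to read what the last unattended run produced. Below is a minimal sketch that wraps the same two commands in a re-runnable function and adds a reader for the last run's output. The function names `Register-LegacyStartupJob` and `Get-LegacyJobOutput` are hypothetical, and the defaults assume the `C:\Scripts\Legacy.ps1` path and `Legacy` job name used above. It needs Windows PowerShell 5.1, where the PSScheduledJob module lives, and `Get-Job` only sees runs that belong to the account that registered the job.

```powershell
# Hypothetical helpers; requires Windows PowerShell 5.1 (PSScheduledJob is not part of PowerShell 7).
function Register-LegacyStartupJob {
    param(
        [string] $Name = 'Legacy',
        [string] $FilePath = 'C:\Scripts\Legacy.ps1'
    )
    # Drop an earlier registration with the same name so the function can be re-run safely
    Get-ScheduledJob -Name $Name -ErrorAction SilentlyContinue |
        Unregister-ScheduledJob -Force

    # Same trigger and registration as the two lines above
    $trigger = New-JobTrigger -AtStartup -RandomDelay 00:00:30
    Register-ScheduledJob -Name $Name -FilePath $FilePath -Trigger $trigger
}

function Get-LegacyJobOutput {
    param([string] $Name = 'Legacy')
    # Scheduled job runs only show up in Get-Job once PSScheduledJob is loaded
    Import-Module PSScheduledJob
    # Take the run that started last and return its output, keeping it stored
    Get-Job -Name $Name -ErrorAction SilentlyContinue |
        Sort-Object PSBeginTime |
        Select-Object -Last 1 |
        Receive-Job -Keep
}
```

For example, call `Register-LegacyStartupJob` once after copying the script, and `Get-LegacyJobOutput` after the next boot to see what the run printed.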

The Windows Server VM (3)

I created a Windows Server 2019 Datacenter Server Core VM to keep it as lightweight as possible. I put it in a separate resource group and didn’t reserve any IP addresses or DNS names. I even disabled all inbound ports, including RDP, for the highest security.

Other specs:

- CPU: 1 vCPU

- Size: Standard B1ms

- RAM: 2 GB

- Disk: Standard HDD (no redundancy)

My choice of the VM image.

The start logic app (1, 2)

In the same resource group, I created a logic app that turns on the VM daily at 00:13 UTC.

Easy, isn’t it?
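The start step itself is configured in the logic app designer. For testing the rest of the chain by hand, or as an alternative to whatever start action the logic app uses, the same operation can be requested from the Azure Resource Manager REST API (`POST .../virtualMachines/{vmName}/start`). The helper below is a sketch that only builds the request path; the function name `Get-VmActionPath`, the placeholder resource names and the default `api-version` of `2023-03-01` are assumptions, not part of the original setup. With Az PowerShell, `Start-AzVM -ResourceGroupName <rg> -Name <vm>` does the same in a single call.

```powershell
# Hypothetical helper: builds the ARM path for a VM power operation.
# The default api-version is an assumption; any version the Compute provider supports should do.
function Get-VmActionPath {
    param(
        [Parameter(Mandatory)] [string] $SubscriptionId,
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $Name,
        [ValidateSet('start', 'deallocate', 'powerOff')] [string] $Action = 'start',
        [string] $ApiVersion = '2023-03-01'
    )
    # Single quotes: no string interpolation, placeholders are filled by -f
    $template = '/subscriptions/{0}/resourceGroups/{1}/providers/Microsoft.Compute/virtualMachines/{2}/{3}?api-version={4}'
    $template -f $SubscriptionId, $ResourceGroupName, $Name, $Action, $ApiVersion
}

# Usage from a signed-in session (Az.Accounts module, Connect-AzAccount); names are placeholders:
#   $path = Get-VmActionPath -SubscriptionId $subId -ResourceGroupName 'rg-legacy-scripts' -Name 'vm-legacy'
#   Invoke-AzRestMethod -Method POST -Path $path
```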

Once the VM has started, the scheduled job runs the Legacy.ps1 script.

At the end of the script, there is an HTTP call to my ‘Stop’ logic app:

```powershell
Invoke-WebRequest -Uri "https://prod-32.westeurope.logic.azure.com:443/workflows/daf4a94e9d334032848f23b2651da35d/triggers/manual/paths/invoke?api-version=2016-10-01&sp=%2Ftriggers%2Fmanual%2Frun&sv=1.0&sig=45c3PJfdneqSgwU8lhrbvD09YKd0s_L55FlxvON4Bajs"
```

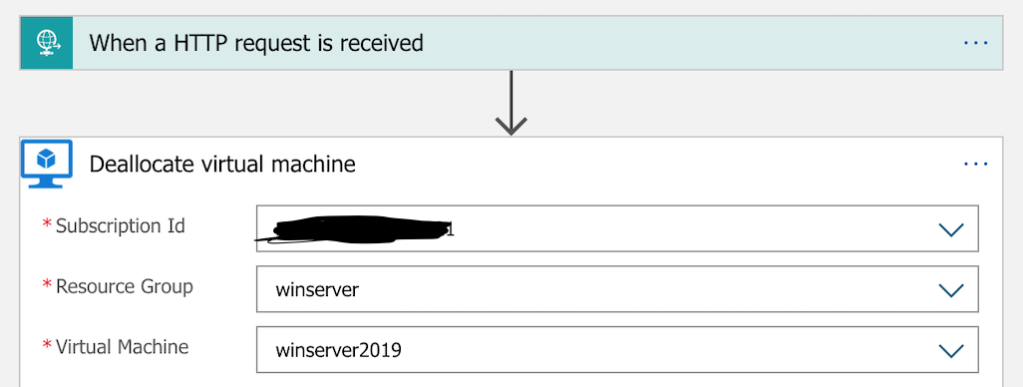
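As written, the Stop logic app is only called if Legacy.ps1 reaches its last line. If the script throws earlier, the VM keeps running, and billing, until someone notices. Below is a minimal sketch of a safer structure. It assumes the original script body is moved into a separate file (`LegacyCore.ps1`, a hypothetical name) and that the trigger URL is kept in `$stopUrl`. It runs the work inside `try`/`finally`, so the callback fires either way. The usage line adds `-Method Post`, since a logic app's Request trigger is documented to expect POST unless another method is configured. It also adds `-UseBasicParsing`, which keeps Windows PowerShell 5.1's `Invoke-WebRequest` from depending on the Internet Explorer engine, which is not available on Server Core. A script that hangs rather than fails is not covered; `finally` only helps with errors.

```powershell
# Hypothetical wrapper: runs the legacy work and always invokes the completion callback,
# even when the work throws, so the Stop logic app is still called.
function Invoke-LegacyJob {
    param(
        [Parameter(Mandatory)] [scriptblock] $Work,
        [Parameter(Mandatory)] [scriptblock] $OnComplete
    )
    try {
        & $Work
    }
    finally {
        # Runs after success and after a terminating error alike
        & $OnComplete
    }
}

# Usage in Legacy.ps1 (LegacyCore.ps1 and $stopUrl are placeholders):
#   Invoke-LegacyJob -Work { & C:\Scripts\LegacyCore.ps1 } -OnComplete { Invoke-WebRequest -Uri $stopUrl -Method Post -UseBasicParsing | Out-Null }
```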

The stop logic app (4, 5)

Naturally, the trigger I use in my second logic app is an HTTP request.

Whenever it is triggered, it stops and deallocates the VM.
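Deallocation is the part that saves money. A VM that is shut down from inside Windows ends up in the Stopped state, where it is still allocated and still billed for compute; a deallocated VM is not. With Az PowerShell, `Stop-AzVM` deallocates by default (`-StayProvisioned` would keep the allocation). To check that the nightly run really ended deallocated, the VM's instance view can be inspected. The helper below (`Get-PowerStateCode`, a hypothetical name) works on the `Statuses` list returned by `Get-AzVM -Status` and picks out the power state. Resource names in the usage comments are placeholders.

```powershell
# Hypothetical helper: returns the PowerState/* code from an instance view's status list,
# e.g. 'PowerState/running' or 'PowerState/deallocated', or $null if none is present.
function Get-PowerStateCode {
    param([object[]] $Statuses)
    $power = $Statuses | Where-Object { $_.Code -like 'PowerState/*' } | Select-Object -First 1
    if ($power) { return $power.Code }
    return $null
}

# Usage against the real VM (Az.Compute module, signed in); names are placeholders:
#   Stop-AzVM -ResourceGroupName 'rg-legacy-scripts' -Name 'vm-legacy' -Force   # deallocates by default
#   $vm = Get-AzVM -ResourceGroupName 'rg-legacy-scripts' -Name 'vm-legacy' -Status
#   Get-PowerStateCode $vm.Statuses
```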

What does it cost?

I’ll save that discussion for later. It may well cost more than a serverless application, but it certainly costs less than a VM that is on and idle for hours every day. What I propose is a workaround for running huge legacy Windows scripts on Azure when you don’t have time to refactor them.

The cost of the last three days.
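Without going into prices, the running-hours side of that comparison is simple arithmetic. The sketch below assumes a 30-day month and adds 5 minutes per run for booting and the startup delay on top of the 15-minute script; both figures are assumptions, not measurements. It only covers compute: the VM's managed OS disk is billed whether the VM is running or deallocated.

```powershell
# Compute hours per month for a VM that runs a given number of minutes per day.
# The 30-day month is a simplification.
function Get-MonthlyRunningHours {
    param(
        [Parameter(Mandatory)] [double] $MinutesPerDay,
        [int] $DaysPerMonth = 30
    )
    $MinutesPerDay * $DaysPerMonth / 60
}

# VM left running around the clock
$alwaysOn  = Get-MonthlyRunningHours -MinutesPerDay (24 * 60)
# 15 minutes of script run time plus an assumed 5 minutes for boot and the startup delay
$scheduled = Get-MonthlyRunningHours -MinutesPerDay (15 + 5)
# Share of the always-on hours, in percent, rounded to one decimal
$share     = [math]::Round(100 * $scheduled / $alwaysOn, 1)
"Scheduled VM: $scheduled h/month, $share% of an always-on VM's $alwaysOn h"
```

Under these assumptions the scheduled VM runs about 10 hours a month, roughly 1.4% of the 720 hours an always-on VM would accumulate.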